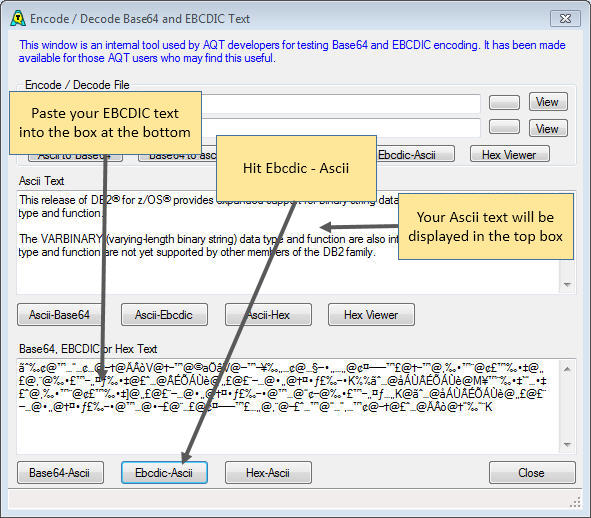

We have EBCDIC files that need to be converted to ASCII files, and I have been unable to find a proper script to do this with PowerShell. Can someone help with this? I have a file with one EBCDIC word on each line. I need to read through each record, convert the word to ASCII, and store it in a separate file. Please help if you know how this is done.

If V does not display the EBCDIC file correctly, you can click on EBC on the status bar (or select EBCDIC Options from the View menu) to specify the correct file format. EBCDIC files are usually in one of 4 formats. Carriage Return Delimited: these files are just like ASCII files.

Hi, we have a huge number of mainframe files in EBCDIC format. These files are created by mainframe systems and are now stored in HDFS as EBCDIC files. I need to read these files (copybooks are available), split them into multiple files based on record type, and store them as ASCII files in HDFS.

EBCDIC to ASCII, and ASCII to EBCDIC converter tool

TextPipe Pro is a robust EBCDIC to ASCII conversion utility. Once your files are decoded from EBCDIC into an ASCII text file in CSV, Tab, XML, JSON or other delimited format, you can load them into a database, or extract the information for other uses. TextPipe translates the widest set of mainframe data, which can vary due to code pages, Cobol compiler dialect and mainframe host machine byte-ordering.

You can also use TextPipe to convert ASCII files to EBCDIC format.

| Convert Mainframe Files Now |

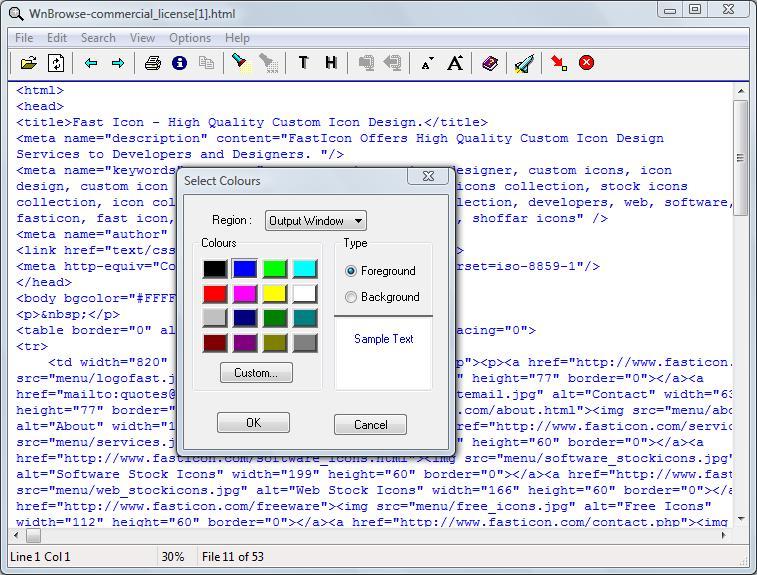

- WnBrowse - File Viewer. WnBrowse is a multifunction ASCII, EBCDIC and Hex file viewer. View large files, including files within zip archives. Search for text, hex and UNICODE strings. Print the entire file or a selected portion. Integrated with the Windows Explorer for two-click file browsing. Works with all versions of Windows.

- The EBCDIC File Viewer is a translator from the IBM EBCDIC encoding to ASCII. It is useful when you have to process plain data from the host that contains no line breaks. When you open a file, it automatically tries to work out the fixed record width for files without line breaks. The viewer also checks whether your file is EBCDIC or ASCII encoded.
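How such a viewer decides between EBCDIC and ASCII is not documented here. As a rough illustration only, one possible heuristic is to decode the same bytes both ways and see which reading produces more plain letters, digits and spaces. The function names below are hypothetical, and `cp037` is an assumed code page.

```python
def _text_score(data: bytes, codec: str) -> float:
    """Share of bytes that decode to an ASCII letter, digit or space under `codec`."""
    text = data.decode(codec, errors="replace")
    good = sum(1 for ch in text if ch.isascii() and (ch.isalnum() or ch == " "))
    return good / len(text)


def guess_encoding(data: bytes) -> str:
    """Return 'ebcdic' or 'ascii', whichever reading looks more like plain text."""
    if not data:
        raise ValueError("no data to inspect")
    ascii_score = _text_score(data, "ascii")
    ebcdic_score = _text_score(data, "cp037")  # assumed EBCDIC code page
    return "ebcdic" if ebcdic_score > ascii_score else "ascii"
```

This works because EBCDIC letters and digits sit above 0x80 and the EBCDIC space is 0x40, so EBCDIC text scores close to zero when read as ASCII. Files that are mostly binary fields (packed decimals, for example) will confuse any heuristic of this kind.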

Once you drag and drop your mainframe files into TextPipe, you choose which filters will be used to convert the file:

- Simple EBCDIC files with no structure. These can be converted using the Mainframe EBCDIC to ASCII filter.

- Fixed length mainframe files. Use the Mainframe EBCDIC to ASCII filter followed by the Convert End of Line Characters filter. For 132 column mainframe reports, set the fixed length to 133. To break the file down into fields/columns, just use the Fixed Width to Delimited Wizard.

- Mainframe CMS Format (variable line length files). Use the Convert End of Line Characters filter.

- Fixed record size (i.e. one segment) with a copybook (e.g. with packed and zoned decimals). Use the Mainframe Copybook filter and simply paste in your copybook. You can choose to output to CSV, Tab, XML, JSON or SQL Insert format. With SQL Insert format, you can insert records directly into a database table.

- Multi-record (multiple segments) format. Use a Mainframe\Mainframe copybook master record filter with multiple Mainframe\Mainframe copybook child record filters (see below) to split records into a different file for each record type. Output to CSV, Tab, XML, JSON or SQL Insert format. With SQL Insert format, you can add a Database Filter to insert each record type into a different database table.
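To make the copybook cases above more concrete, here is a minimal Python sketch of what decoding a fixed-length record involves. It is not how TextPipe works internally. The layout is hypothetical (`NAME PIC X(10)` followed by `AMOUNT PIC S9(5)V99 COMP-3`), and `cp037` is an assumed code page.

```python
from decimal import Decimal

CODEC = "cp037"      # assumption: US/Canada EBCDIC; use the code page your host used
RECORD_LENGTH = 14   # hypothetical layout: NAME PIC X(10) + AMOUNT PIC S9(5)V99 COMP-3


def unpack_comp3(raw: bytes, scale: int = 0) -> Decimal:
    """Decode packed decimal: two digits per byte, with the sign in the last nibble."""
    nibbles = []
    for byte in raw:
        nibbles.append(byte >> 4)
        nibbles.append(byte & 0x0F)
    sign = nibbles.pop()  # the final nibble is the sign, not a digit
    if sign < 0x0A or any(d > 9 for d in nibbles):
        raise ValueError("not a valid packed-decimal field")
    value = int("".join(map(str, nibbles)))
    if sign in (0x0B, 0x0D):  # B and D mean negative; A, C, E and F mean positive
        value = -value
    return Decimal(value).scaleb(-scale)


def decode_record(record: bytes):
    """Translate the text field, but unpack the numeric field byte by byte."""
    name = record[:10].decode(CODEC).rstrip()
    amount = unpack_comp3(record[10:14], scale=2)
    return name, amount


def convert(data: bytes):
    """Yield one decoded tuple per fixed-length record."""
    if len(data) % RECORD_LENGTH:
        raise ValueError("file size is not a multiple of the record length")
    for offset in range(0, len(data), RECORD_LENGTH):
        yield decode_record(data[offset:offset + RECORD_LENGTH])
```

The point of the split is that only `PIC X` fields are translated as characters. Packed fields are binary and must never go through an EBCDIC-to-ASCII character translation.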

Sometimes TextPipe won't be able to parse your copybook - due to PIC clauses missing trailing periods, comments in the wrong spot, bizarre line breaks or field names etc. Please just send us the copybook or filter and we'll be happy to help.

When a Mainframe EBCDIC copybook consists of multiple records, you need to separate them out into a general structure, with each record type going to a new file for clarity and ease of loading/processing. TextPipe 11 makes this easy.

Sample Multi-Segment Mainframe EBCDIC Copybook

In our sample copybook below, the second field of every segment contains a 5 character record type id, T-REC-ID, which has values of #REC#, #TRAA, #TRAD etc. Fields beyond this are unique to each segment. Note: Often, the record type field's VALUES are not included in the copybook, but have to be read from the comments or documentation. In these cases the record type field usually has a constant name like REC-ID.

Multi-segment COBOL copybook conversion

With TextPipe 11+, the steps to convert a multi-segment COBOL file are:

- Open TextPipe, and drag and drop your binary file that you want to convert (this file is in EBCDIC, not ASCII)

- From the Filters to Apply tab, add a Filter Library\Mainframe\Mainframe copybook master record filter, and set the general options such as the output format (CSV, Tab, Pipe, XML, ;-delimited, JSON, SQL Insert script)

- Set the name of the field that contains the Record Type e.g. T-REC-ID, RECORD-TYPE. This field in each segment tells TextPipe which segment type is being processed

- Set the base output filename - this is the base folder and file that will be used for each segment. The name of the file will be base output filename plus the Segment Type

- Add one or more Filter Library\Mainframe\Mainframe copybook child record filters as subfilters (i.e. inside) the Mainframe copybook master record. You can drag child filters inside the master filter. These child filters inherit settings from their parent.

- In each child record, set the child record type (e.g. #REC#, #TRAA, #TRAD, A, B, C, 01, 02, 03) and paste in the associated copybook. If TextPipe complains about the format of your copybook, please contact support.

Here is how it looks:

This results in the following files being output:

- User's Desktop#REC#.csv - #REC# segments

- User's Desktop#TRAA.csv - #TRAA segments

- User's Desktop#TRAB.csv - #TRAB segments

- User's Desktop#TRAC.csv - #TRAC segments

- User's Desktop#TRAD.csv - #TRAD segments
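The routing idea behind the master and child filters can be sketched in Python as follows. This illustrates the general technique, not TextPipe's implementation. It assumes the record type sits at bytes 3 to 7 of each record, encoded in `cp037`, and that each type has a known total length. The lengths in `RECORD_LENGTHS` are made up for the example.

```python
from collections import defaultdict

# Hypothetical record lengths in bytes; real values come from each copybook.
RECORD_LENGTHS = {"#REC#": 73, "#TRAA": 73, "#TRAD": 40}


def split_by_type(data: bytes, lengths=RECORD_LENGTHS, codec: str = "cp037") -> dict:
    """Group raw records by their record type id, keeping the original bytes."""
    segments = defaultdict(list)
    offset = 0
    while offset < len(data):
        # Assumed layout: 3-byte prefix, then a 5-character EBCDIC record type.
        rec_type = data[offset + 3:offset + 8].decode(codec)
        if rec_type not in lengths:
            raise ValueError(f"unknown record type {rec_type!r} at offset {offset}")
        end = offset + lengths[rec_type]
        if end > len(data):
            raise ValueError(f"truncated {rec_type} record at offset {offset}")
        segments[rec_type].append(data[offset:end])
        offset = end
    return dict(segments)
```

Each list in the result could then be decoded with its own copybook layout and written to a file named after the record type, which mirrors the base output filename plus segment type scheme described above.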

Mainframe EBCDIC copybook filter design pattern

Sometimes the segment types can be a range of values, like 00-30, which could require a huge number of child records to be added. An alternative approach is to use a pattern match on the record type. With TextPipe, the steps are:

- Use an EasyPattern match to identify each record type we have in the file – using the record header

- Pass this record header with its associated data to a subfilter

- The subfilter handles just that record type, and puts each record type in a new file.

In our sample file, each record starts with a 3 byte field which we will ignore, followed by a 5 letter record type id, #REC#, #TRAA, #TRAD etc. There is also a field following this record id, but we don't need it, and the value might not always be 'N'. Note: Often, the record type field's VALUES are not included in the copybook, but have to be read from the comments or documentation. In these cases the record type field usually has a constant name like REC-ID.

Sample Mainframe Copybook

Records

The general TextPipe pattern for each record type within the file looks like this:

Let's dig into this.

The first step is an EasyPattern (a user-friendly type of pattern match). It looks for

- The start of the text, followed by

- Any 3 characters (the PIC S9(4) COMP field we are ignoring), followed by

- the EBCDIC characters #TRTS (because PCs use ASCII, we need to specify that the literal is EBCDIC), followed by

- 65 other characters – the rest of the record

When something matches this pattern, it must be a record of type #TRTS, and it gets passed unchanged to the subfilter:

- The Mainframe Copybook filter applies just the copybook segment that relates to the #TRTS record

- The result goes to the file TRTS-records.txt on your desktop

- The text is then prevented from continuing in the filter – it stops dead here.
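The EasyPattern itself is TextPipe-specific, but the same match can be approximated with an ordinary regular expression over bytes. The sketch below is an illustration in Python, assuming `cp037` as the code page. `record_pattern` is a hypothetical helper, not part of any product.

```python
import re


def record_pattern(rec_type: str, record_length: int,
                   prefix_length: int = 3, codec: str = "cp037"):
    """Build a bytes regex: N prefix bytes, the EBCDIC literal, then the rest of the record."""
    literal = rec_type.encode(codec)  # the literal must be EBCDIC, not ASCII
    rest = record_length - prefix_length - len(literal)
    pattern = (
        b".{%d}" % prefix_length
        + re.escape(literal)          # '#' is 0x7B in cp037, which is '{' to the regex engine
        + b".{%d}" % rest
    )
    return re.compile(pattern, re.DOTALL)  # DOTALL so '.' matches every byte value


trts = record_pattern("#TRTS", 73)  # 73 - 3 - 5 leaves 65 trailing bytes
```

Calling `trts.match(data, offset)` anchors the match at a given position, which plays the role of "the start of the text" in the steps above. Escaping the literal matters because EBCDIC bytes can coincide with regex metacharacters.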

Pro Tip: We can easily copy this structure by selecting the top filter, and choosing the [x2] button in the footer.

Working out the copybook size

When we paste each copybook fragment into a mainframe copybook filter, we can click the [Show Parse Tree] button to find out the record length. e.g.

Once we know the length is 73, we just subtract 8 from it, (the first 3 characters followed by the 5 character record type = 8) to find out the remaining characters we need, in this case, 65:

We also have to grab the record type TRAA from the copybook and put it into the pattern match, and also into the output filename.

Then we move onto the next record type.

Master record match

Once we have set up all the record types, we need to gather ALL the record patterns into one MASTER pattern match at the top of the list.

The master copybook pattern match looks like this:

Ready to Rock 'n' Roll!

Then you're ready to drag and drop your mainframe file into TextPipe and click [Go] or press [F9]!

Errors?

If the error log reports that there were extra bytes in the file that could not be matched, this indicates that

- the pattern matches are incorrect

- the copybooks are incorrect. If you are converting a single record type, check that the file size is an exact multiple of the record size from the [Show Parse Tree] option. If you know you have just a single record type, you can use a website to get the prime factors of the file size to work out a likely copybook size.

- the settings used by the mainframe copybook filter are wrong. Try experimenting with the settings 'Assume adjacent COMP fields are overlapped' and 'Allow COMP-3 to be unsigned' of the most common or initial record type. Unfortunately the copybook does not tell us how exactly the data has been stored.

- the original data or original copybook is wrong

- the original data might have been converted to ASCII when it was transferred off the mainframe, and this is the most common problem we see. This leads to corrupted packed decimal values, so always ensure that the file you receive is FTPed off the mainframe in binary mode.
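The file-size check suggested above for single-record files is easy to script instead of using a website. This is a small Python sketch with hypothetical function names. It lists every divisor of the file size rather than only the prime factors, since any divisor is a possible record length.

```python
def candidate_record_lengths(file_size: int, minimum: int = 2) -> list:
    """Return every divisor of file_size that is at least `minimum`, in ascending order."""
    divisors = set()
    i = 1
    while i * i <= file_size:
        if file_size % i == 0:
            divisors.update((i, file_size // i))
        i += 1
    return sorted(d for d in divisors if d >= minimum)


def is_whole_number_of_records(file_size: int, record_length: int) -> bool:
    """True when the file holds an exact number of records of this length."""
    return file_size % record_length == 0
```

In practice `file_size` would come from `os.path.getsize(path)`. For a 730-byte file, the candidates include 73, which matches the record length used in the example earlier.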

May the force be with you!

Automation

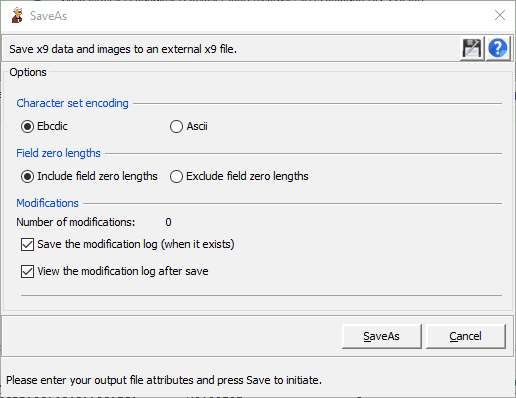

Once tested, the TextPipe filter can be run automatically or on a schedule from the command line. It can also be controlled by other software using COM.

Need Help With Your Copybook?

Please drop us an email with your copybook at support2@datamystic.com

In the first article in this series, we introduced EBCDIC code pages, and how they work with z/OS. But z/OS became more complicated when UNIX System Services (USS) introduced ASCII into the mix. Web-enablement and XML add UTF-8 and other Unicode specifications as well. So how do EBCDIC, ASCII and Unicode work together?

If you are a mainframe veteran like me, you work in EBCDIC. You use a TN3270 emulator to work with TSO/E and ISPF, you use datasets, and every program is EBCDIC. Or at least that's the way it used to be.

UNIX System Services (USS) has changed all that. Let's take a simple example. Provided it has been set up by the Systems Programmer, you can now use any client such as PuTTY to access z/OS using telnet, SSH or rlogin. From here, you get a standard UNIX shell that will feel like home to anyone who has used UNIX on any platform. telnet, SSH and rlogin are ASCII sessions. So z/OS (or more specifically, TCP/IP) will convert everything going to or from that client between ASCII and EBCDIC.

Like EBCDIC, extended ASCII has different code pages for different languages and regions, though not nearly as many. Most English speakers will use ISO-8859-1. If you're from Norway, you may prefer ISO-8859-10 (Latin-6, designed for Nordic languages), and Russians will probably go for ISO-8859-5. In UNIX, the character set you use is part of the locale, which also includes the preferred currency symbol and date formats. The locale is set using the setenv command to update the LANG or LC_* environment variables. You then set the language on your Telnet client to match, and you're away. Here is how it's done on PuTTY.

From the USS shell on z/OS, this is exactly the same (it is a POSIX compliant shell after all). So to change the locale to France, we use the USS setenv command. In the locale name, the first two characters are the language code specified in the ISO 639-1 standard, and the second two are the country code from ISO 3166-1.

Converting to and from ASCII on z/OS consumes resources. If you're only working with ASCII data, it would be a good idea to store the data in ASCII, and avoid the overhead of always converting between EBCDIC and ASCII. The good news is that this is no problem. ASCII is also a Single Byte Character Set (SBCS), so all the z/OS instructions and functions work just as well for ASCII as they do for EBCDIC. Database managers generally just store bytes. So if you don't need them to display the characters in a readable form on a screen, you can easily store ASCII in z/OS datasets, USS files and z/OS databases.

The problem is displaying that information. Using ISPF to edit a dataset with ASCII data will show gobbledygook: unreadable characters. ISPF browse has similar problems.
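The effect is easy to reproduce off the mainframe. The Python sketch below shows the same word as ASCII and EBCDIC bytes, and what happens when ASCII bytes are read as if they were EBCDIC. It uses `cp037` because Python's standard library does not ship an IBM-1047 codec. The two code pages agree on letters and digits, so the illustration still holds. The helper names are hypothetical.

```python
EBCDIC = "cp037"  # assumption: stand-in for IBM-1047, which Python does not include


def ascii_to_ebcdic(data: bytes) -> bytes:
    """Translate ASCII bytes to EBCDIC bytes, character by character."""
    return data.decode("ascii").encode(EBCDIC)


def view_as_ebcdic(data: bytes) -> str:
    """Interpret bytes the way an EBCDIC-only viewer would."""
    return data.decode(EBCDIC)


ascii_bytes = "HELLO".encode("ascii")
print(ascii_bytes.hex())                    # 48454c4c4f
print(ascii_to_ebcdic(ascii_bytes).hex())   # c8c5d3d3d6
print(repr(view_as_ebcdic(ascii_bytes)))    # unreadable: the bytes mean other characters
```

The bytes are stored perfectly well either way. Only the interpretation at display time differs, which is why a tag or setting that records the encoding is so useful.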

With traditional z/OS datasets, there's nothing you can do. However z/OS USS files have a tag that can specify the character set that is used. For example, look at the ls listing of the USS file here:

You can see that the code page used is IBM-1047 - the default EBCDIC. The t to the left of the output indicates that the file holds text, and T=on indicates that the file holds uniformly encoded text data. However here is another file:

The character set is ISO8859-1: extended ASCII for English speakers. This tag can be set using the USS chtag command. It can also be set when mounting a USS file system, setting the default tag for all files in that file system.

This tag can be used to determine how the file will be viewed. From z/OS 1.9, this means that if you use ISPF edit or browse to access a USS file, ASCII characters will automatically be displayed if this tag is set to CCSID 819 (ISO8859-1). The TSO/E OBROWSE and OEDIT commands, ISPF option 3.17, and the ISHELL interface all use ISPF edit and browse.

If storing ASCII in traditional z/OS datasets, ISPF BROWSE and EDIT will not automatically convert from ASCII. Nor will it convert for any other character set other than ISO8859-1. However you can still view the ASCII data using the SOURCE ASCII command. Here is an example of how this command works.

Some database systems also store the code page. For example, have a look at the following output from the SAS PROC CONTENTS procedure. This shows the definitions of a SAS table. You can see that it is encoded in EBCDIC 1142 (Denmark/Norway):

DB2 also plays this kind of ball. Every DB2 table can have an ENCODING_SCHEME value recorded in SYSIBM.SYSTABLES, which overrides the default CCSID. This value can also be overridden in the SQL Descriptor Area for SQL statements, or in the stored procedure definition for stored procedures. You can also specify the CCSID when binding an application, or in the DB2 DECLARE or CAST statements.

Or in other words, if you define tables and applications correctly, DB2 will do all the translation for you.

Unicode has one big advantage over EBCDIC and ASCII: there are no code pages. Every character is represented in the same table. And the Standards people have made sure that Unicode has enough room for a lot more characters - even the Star Trek Klingon language characters get a mention.

But of course this would be too simple. There are actually a few different Unicodes out there:

- UTF-8: Multi-byte character set in which each character takes one to four bytes. The basic ASCII characters (including a-z, A-Z, 0-9) are encoded as the same single bytes as in ASCII.

- UTF-16: Multi-byte character set, though most characters are two bytes.

- UTF-32: Each character is four bytes.
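The size differences between the three forms are easy to check. This short Python sketch (the helper name is hypothetical) reports how many bytes one character needs in each encoding. The big-endian codec names are used so that no byte order mark is added to the count.

```python
def encoded_lengths(ch: str) -> dict:
    """Number of bytes needed for one character in each Unicode encoding form."""
    return {name: len(ch.encode(name)) for name in ("utf-8", "utf-16-be", "utf-32-be")}


for ch in ("A", "\u00e9", "\u20ac", "\U0001F600"):  # A, e-acute, euro sign, an emoji
    print(f"U+{ord(ch):04X}", encoded_lengths(ch))
```

The letter A needs 1, 2 and 4 bytes respectively, while the emoji needs 4 bytes in all three. That is the trade-off between the forms: UTF-8 is compact for ASCII-heavy data, and UTF-32 is fixed-width at the cost of space.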

Most high level languages have some sort of Unicode support, including C, COBOL and PL/1. However you need to tell these programs that you're using Unicode in compiler options or string manipulation options.

z/OS also has instructions for converting between Unicode, UTF-8, UTF-16 and UTF-32. By Unicode, IBM means Unicode Basic Latin: the first 255 characters of Unicode - which fit into one byte.

A problem with anything using Unicode on z/OS is that it can be expensive in terms of CPU use. To help out, IBM has introduced some new Assembler instructions oriented towards Unicode. Many of the latest high level language compilers use these when working with Unicode instructions - making these programs much faster. If you have a program that uses Unicode and hasn't been recompiled for a few years, consider recompiling it. You may see some performance improvements.

z/OS is still EBCDIC and always will be. However IBM has realised that z/OS needs to talk to the outside world, and so other character encoding schemes like ASCII and Unicode need to be supported - and are.

z/OS USS files have an attribute to tell you the encoding scheme, and some database systems like SAS and DB2 also give you an attribute to set. Otherwise, you need to know yourself how the strings are encoded.

This second article has covered how EBCDIC, ASCII and Unicode work together with z/OS. In the final article in this series of three, I'll look at converting between them.